🧠 Generic Multicam Flow

The Generic Multicam Flow is a flexible foundation for projects that involve one or more video streams.

It enables capturing, processing, and analyzing footage from multiple sources simultaneously using AI models of any kind.

This flow provides a ready-to-use architecture that combines real-time inference, session tracking, logging, data capture, and dynamic configuration in a unified system.

⚙️ Global Architecture

The flow is divided into six major subflows:

- Main Flow – Main inference and processing pipeline.

- Monitor – Real-time system supervision and visualization.

- Config – Centralized management of models, cameras, and runtime settings.

- Training – Automatic image capture into structured datasets for future training.

- Log – Event logging, inference recording, and performance tracking.

- Sessions – Session history, results management, and ERP integrations.

Each module can operate independently or as part of an orchestrated session controlled from the Monitor panel.

🎥 Main Flow – Main Inference and Processing Pipeline

Main Flow is the core of the system: live video streams are ingested, preprocessed, analyzed by AI models, and postprocessed for output.

Components:

- Input: Supports multiple simultaneous video sources (IP cameras, RTSP/RTMP streams, local files, or synthetic feeds).

- Preprocessing: Handles frame normalization, resizing, batching, and filtering before inference.

- Inference: Runs AI models (object detection, segmentation, OCR, recognition, or custom analytics).

- Postprocessing: Applies detection filtering, thresholding, tracking, and result overlays.

- Output: Sends data and visual feedback to the Log, Sessions, and Monitor modules, and passes triggered captures to Training.
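
The five stages above can be pictured as a chain of plain functions. The following is a minimal sketch under stated assumptions, not the flow's actual interface: `Frame`, `Detection`, `preprocess`, `stub_model`, `postprocess`, and `run_pipeline` are hypothetical names, frames are non-empty nested lists rather than real images, and the model is a stub.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Sequence

Frame = List[List[float]]  # hypothetical stand-in for an image: rows of 0..255 pixel values


@dataclass
class Detection:
    label: str
    confidence: float


def preprocess(frame: Frame) -> Frame:
    """Normalize pixel values from the 0..255 range to 0..1."""
    return [[pixel / 255.0 for pixel in row] for row in frame]


def stub_model(frame: Frame) -> List[Detection]:
    """Placeholder model: reports one 'bright' detection scored by mean intensity."""
    pixels = [pixel for row in frame for pixel in row]
    return [Detection("bright", sum(pixels) / len(pixels))]


def postprocess(detections: List[Detection], threshold: float) -> List[Detection]:
    """Drop detections whose confidence is below the threshold."""
    return [d for d in detections if d.confidence >= threshold]


def run_pipeline(
    frames: Iterable[Frame],
    model: Callable[[Frame], List[Detection]],
    threshold: float = 0.5,
    sinks: Sequence[Callable[[List[Detection]], None]] = (),
) -> List[List[Detection]]:
    """Run each frame through preprocess -> infer -> postprocess -> output sinks."""
    results = []
    for frame in frames:
        detections = postprocess(model(preprocess(frame)), threshold)
        for sink in sinks:  # e.g. hypothetical Log, Sessions, or Monitor handlers
            sink(detections)
        results.append(detections)
    return results
```

Passing the model and the sinks as callables keeps the sketch model-agnostic: any function with the same shape can replace `stub_model`.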

Highlights:

- Multi-threaded architecture for parallel camera processing.

- Model-agnostic pipeline supporting PyTorch, TensorFlow, ONNX, and OpenVINO.

- Frame skipping and prioritization for performance optimization.

- Configurable capture triggers (e.g., detections, anomalies, events).
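
Parallel camera processing combined with frame skipping might look like the sketch below. It assumes one worker thread per camera and a fixed "keep every Nth frame" policy; `process_camera`, `run_cameras`, and the `skip` parameter are hypothetical, and integers stand in for frames.

```python
import threading
from typing import Dict, Iterable, List


def process_camera(
    camera_id: str,
    frames: Iterable[int],
    skip: int,
    results: Dict[str, List[int]],
    lock: threading.Lock,
) -> None:
    """Keep every `skip`-th frame from one camera and record what was kept."""
    kept = [frame for index, frame in enumerate(frames) if index % skip == 0]
    with lock:  # guard the shared results dictionary
        results[camera_id] = kept


def run_cameras(sources: Dict[str, Iterable[int]], skip: int = 3) -> Dict[str, List[int]]:
    """Start one worker thread per camera and wait for all of them to finish."""
    if skip < 1:
        raise ValueError("skip must be at least 1")
    results: Dict[str, List[int]] = {}
    lock = threading.Lock()
    threads = [
        threading.Thread(target=process_camera, args=(cam, frames, skip, results, lock))
        for cam, frames in sources.items()
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

In CPython, threads mainly help while workers wait on I/O such as network streams. CPU-bound work benefits only when the underlying library releases the interpreter lock.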

🖥️ Monitor – Real-Time Supervision and Visualization

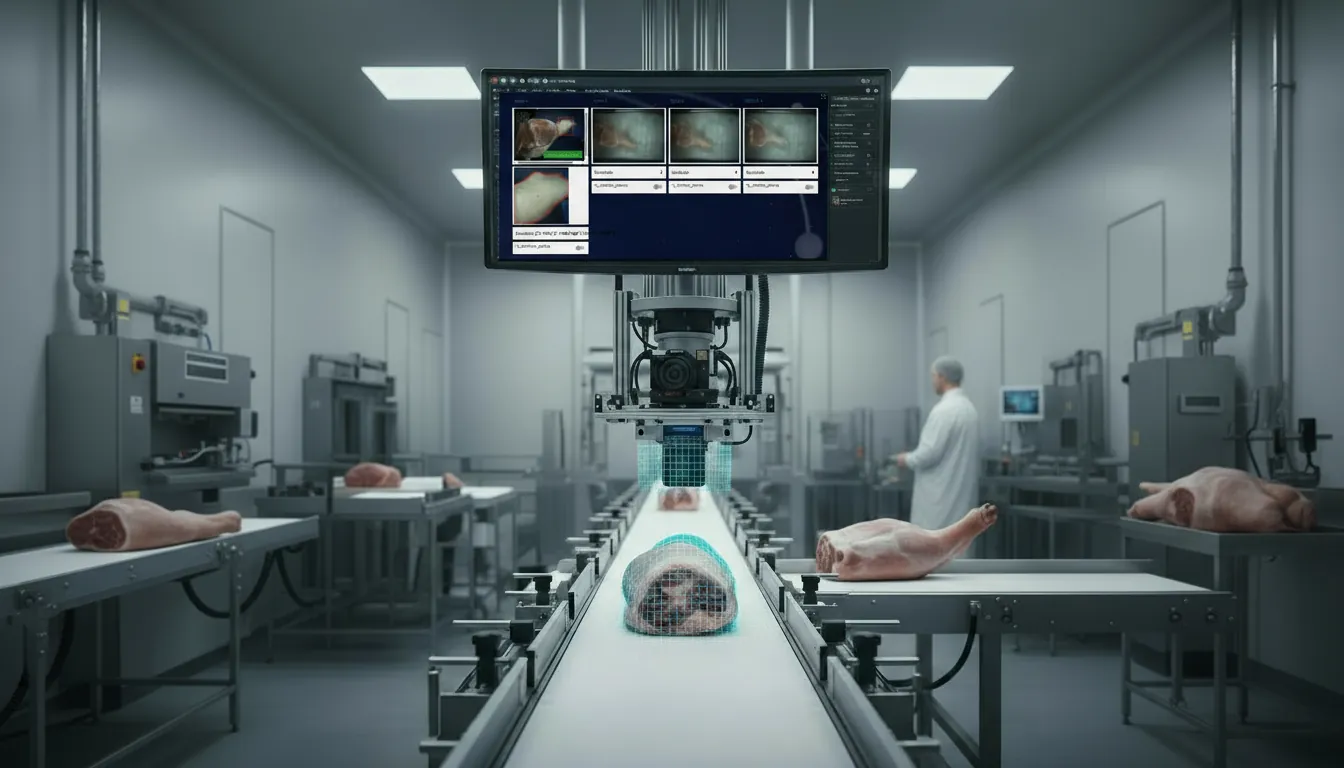

The Monitor subflow provides an operational view of all active cameras, models, and sessions.

It allows users to interact with ongoing processes and control the flow lifecycle directly.

Features:

- Real-time video preview from all active cameras.

- Per-stream frame rate, latency, and inference statistics.

- Status tracking of model performance and system resources (GPU/CPU usage).

- Alerts for stream loss, errors, or frame drops.

- Start, pause, or stop sessions directly from the dashboard.

Monitor acts as the control center for the entire system, combining live visualization with interactive management tools.
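
Per-stream frame rate and latency figures like those listed above are often computed over a sliding window of recent frames. The sketch below assumes that approach; `StreamStats` and its methods are hypothetical and do not describe how Monitor is actually implemented.

```python
from collections import deque
from typing import Deque


class StreamStats:
    """Rolling statistics for one stream over the most recent `window` frames."""

    def __init__(self, window: int = 30) -> None:
        self._arrivals: Deque[float] = deque(maxlen=window)   # arrival times, seconds
        self._latencies: Deque[float] = deque(maxlen=window)  # inference latency, ms

    def record(self, arrival_s: float, latency_ms: float) -> None:
        """Register one processed frame."""
        self._arrivals.append(arrival_s)
        self._latencies.append(latency_ms)

    def fps(self) -> float:
        """Frames per second across the window (0.0 until two frames are seen)."""
        if len(self._arrivals) < 2:
            return 0.0
        span = self._arrivals[-1] - self._arrivals[0]
        return (len(self._arrivals) - 1) / span if span > 0 else 0.0

    def mean_latency_ms(self) -> float:
        """Average latency across the window (0.0 when empty)."""
        if not self._latencies:
            return 0.0
        return sum(self._latencies) / len(self._latencies)
```

A bounded `deque` drops the oldest sample automatically, so the figures follow recent behavior rather than the whole session.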

⚙️ Config – Dynamic Configuration Management

The Config module allows live modification of system parameters without stopping the flow.

All updates are validated and applied in real time, ensuring a flexible yet safe environment for experimentation and adaptation.

Core Capabilities:

- Add, edit, or remove cameras and data sources dynamically.

- Change AI models, weights, inference thresholds, and processing options.

- Define rule-based triggers (e.g., capture when object = “person” with confidence > 0.8).

- Persist all settings to storage or database for recovery on restart.

- Hot reloading of new configurations across all modules.

This subflow enables full runtime adaptability, which is critical for continuous operation in production environments.
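
The rule-based trigger and the validate-then-apply behavior described above can be sketched as follows. This is an illustration under assumptions: `TriggerRule`, `validate`, and `LiveConfig` are hypothetical names, and the real Config module may store and validate settings differently.

```python
import threading
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TriggerRule:
    """Capture when the detected label matches and confidence exceeds the minimum."""
    label: str
    min_confidence: float

    def matches(self, label: str, confidence: float) -> bool:
        return label == self.label and confidence > self.min_confidence


def validate(rule: TriggerRule) -> TriggerRule:
    """Reject rules that could never be applied safely."""
    if not rule.label:
        raise ValueError("label must not be empty")
    if not 0.0 <= rule.min_confidence <= 1.0:
        raise ValueError("min_confidence must be within [0, 1]")
    return rule


class LiveConfig:
    """Holds the active rule; an update is validated before it replaces the old one."""

    def __init__(self, rule: TriggerRule) -> None:
        self._lock = threading.Lock()
        self._rule = validate(rule)

    def current(self) -> TriggerRule:
        with self._lock:
            return self._rule

    def update(self, **changes) -> TriggerRule:
        with self._lock:
            candidate = validate(replace(self._rule, **changes))
            self._rule = candidate  # reached only if validation passed
            return candidate
```

Because the rule is immutable and swapped as a whole, a reader never sees a half-applied update, and a rejected update leaves the previous settings in place.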

🧩 Training – Image Dataset Capture

Despite its name, the Training subflow does not train models. It is dedicated to capturing and organizing images for dataset creation.

Its goal is to automate the collection of relevant frames under specified conditions.

How it works:

- Automatically captures images based on detections or manual triggers.

- Categorizes frames by model labels, camera ID, and timestamp.

- Organizes data into folder structures ready for model training.

- Supports filtering by class, confidence, or time window.

- Works seamlessly with the main inference loop (Main Flow).

This module simplifies dataset creation by transforming live video feeds into structured, ready-to-train image datasets.
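
One plausible folder layout for captured frames is `root/label/camera_id/timestamp.jpg`. The sketch below assumes that layout; `capture_path` and `save_capture` are hypothetical helpers, and the structure the Training subflow actually produces may differ.

```python
from datetime import datetime, timezone
from pathlib import Path


def capture_path(root: Path, label: str, camera_id: str, taken_at: datetime) -> Path:
    """Build root/label/camera_id/<UTC timestamp>.jpg for one captured frame.

    A naive `taken_at` is interpreted as local time by `astimezone`.
    """
    stamp = taken_at.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    return root / label / camera_id / f"{stamp}.jpg"


def save_capture(
    root: Path, label: str, camera_id: str, taken_at: datetime, image_bytes: bytes
) -> Path:
    """Write already-encoded image bytes to their place in the dataset tree."""
    target = capture_path(root, label, camera_id, taken_at)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(image_bytes)
    return target
```

One folder per label matches the layout that many image-classification loaders expect. The microsecond timestamp keeps file names unique within a camera.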

📋 Log – Centralized Event and Result Logging

The Log subflow consolidates all system events, results, and statistics into structured records.

Functionality:

- Logs every inference result with timestamps, model name, and camera ID.

- Captures system metrics such as FPS, latency, and resource usage.

- Structured JSON logging for easy integration with external tools.

- Full event audit trail with error and warning tracking.

- Optional export to Elasticsearch, InfluxDB, or CSV files.

This unified logging approach ensures full traceability and simplifies debugging, analytics, and reporting.
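
A structured JSON record for one inference result could look like the sketch below. The field names and the `inference_record` helper are assumptions for illustration; the actual Log schema is not specified here.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional


def inference_record(
    camera_id: str,
    model: str,
    detections: List[Dict[str, object]],
    fps: float,
    latency_ms: float,
    timestamp: Optional[datetime] = None,
) -> str:
    """Serialize one inference result as a single-line JSON log entry."""
    moment = timestamp or datetime.now(timezone.utc)
    record = {
        "timestamp": moment.isoformat(),
        "camera_id": camera_id,
        "model": model,
        "detections": detections,
        "metrics": {"fps": fps, "latency_ms": latency_ms},
    }
    return json.dumps(record, sort_keys=True)
```

Writing one JSON object per line keeps the log easy to stream into external tools, which can parse each line independently.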

📊 Sessions – Session History and Integrations

The Sessions module tracks the lifecycle of all processing sessions started through the Monitor interface.

Each session is recorded, including its metadata, configuration, and results.

Key Features:

- Stores the full history of all past sessions, including camera list, model used, and duration.

- Displays aggregated results and analytics for each session.

- Allows CSV download of session data for offline analysis.

- Integrates with external ERP or data management systems via API.

- Links with the Log and Config modules for synchronized record keeping.

By centralizing session management, this subflow enables deep retrospective analysis, external reporting, and seamless enterprise integration.
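
The CSV download of session data can be illustrated with Python's standard `csv` module. `SessionRecord`, its fields, and `sessions_to_csv` are hypothetical; they mirror the session attributes listed above (camera list, model, duration) rather than the product's real export format.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class SessionRecord:
    session_id: str
    cameras: List[str]
    model: str
    duration_s: float
    detections: int


def sessions_to_csv(sessions: Iterable[SessionRecord]) -> str:
    """Render session history as CSV text with one row per session."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["session_id", "cameras", "model", "duration_s", "detections"])
    for session in sessions:
        writer.writerow([
            session.session_id,
            ";".join(session.cameras),  # several cameras share one cell
            session.model,
            session.duration_s,
            session.detections,
        ])
    return buffer.getvalue()
```

Joining the camera list with semicolons keeps each session on a single row, which is usually easier to handle in spreadsheets.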

🧱 Interconnection Between Subflows

| Subflow | Main Role | Interactions |
|---|---|---|
| Main Flow | Core inference engine | Sends results to Log, Sessions, and Monitor |
| Monitor | UI and live control | Controls Main Flow and triggers Sessions |
| Config | Dynamic settings | Updates Main Flow and Sessions parameters |
| Training | Dataset creation | Receives captures from Main Flow |
| Log | Event tracking | Collects and stores outputs from all modules |
| Sessions | History and export | Aggregates results and supports ERP integrations |

All modules are interconnected through internal channels, ensuring that configuration, execution, and data reporting remain consistent.
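
The "internal channels" are not specified further. One common way to build such wiring is an in-process publish/subscribe bus, sketched below purely as an assumption; `EventBus` and the topic names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class EventBus:
    """Minimal synchronous publish/subscribe channel keyed by topic name."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler to be called for every message on `topic`."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver `payload` to each subscriber and return how many were notified."""
        handlers = list(self._subscribers.get(topic, ()))
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

In this picture, Main Flow would publish on a topic such as `"inference"`, and Log, Sessions, and Monitor would each subscribe to it, so the producer needs no knowledge of its consumers.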

💡 Typical Use Cases

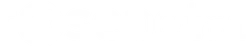

- Real-time multi-camera analytics in retail, industry, or security.

- Object or event detection across distributed video sources.

- Automated data collection for dataset generation and model improvement.

- Project templates for rapid AI prototyping and deployment.

- ERP-integrated analytics dashboards for visual inspection or process tracking.

🧾 Summary

The Generic Multicam Flow by Rosepetal AI offers a robust foundation for any vision-based system requiring multi-camera coordination, AI inference, and structured session management.

It combines modularity, transparency, and extensibility, allowing developers and integrators to build advanced visual intelligence applications with minimal effort.
